For digital exhibitions at museums and heritage sites, search is where the electronic medium really pays off: done well, it engages visitors and prompts them to find their own pathways through an otherwise impenetrable list of artifacts.

Good search experiences should create meaningful itineraries through exhibition artifacts, much as good exhibition design does in a physical space. When representing your exhibition digitally, it’s worth thinking along these lines: a search experience should be designed to meet your visitors’ interests and make them want to wander through your virtual space.

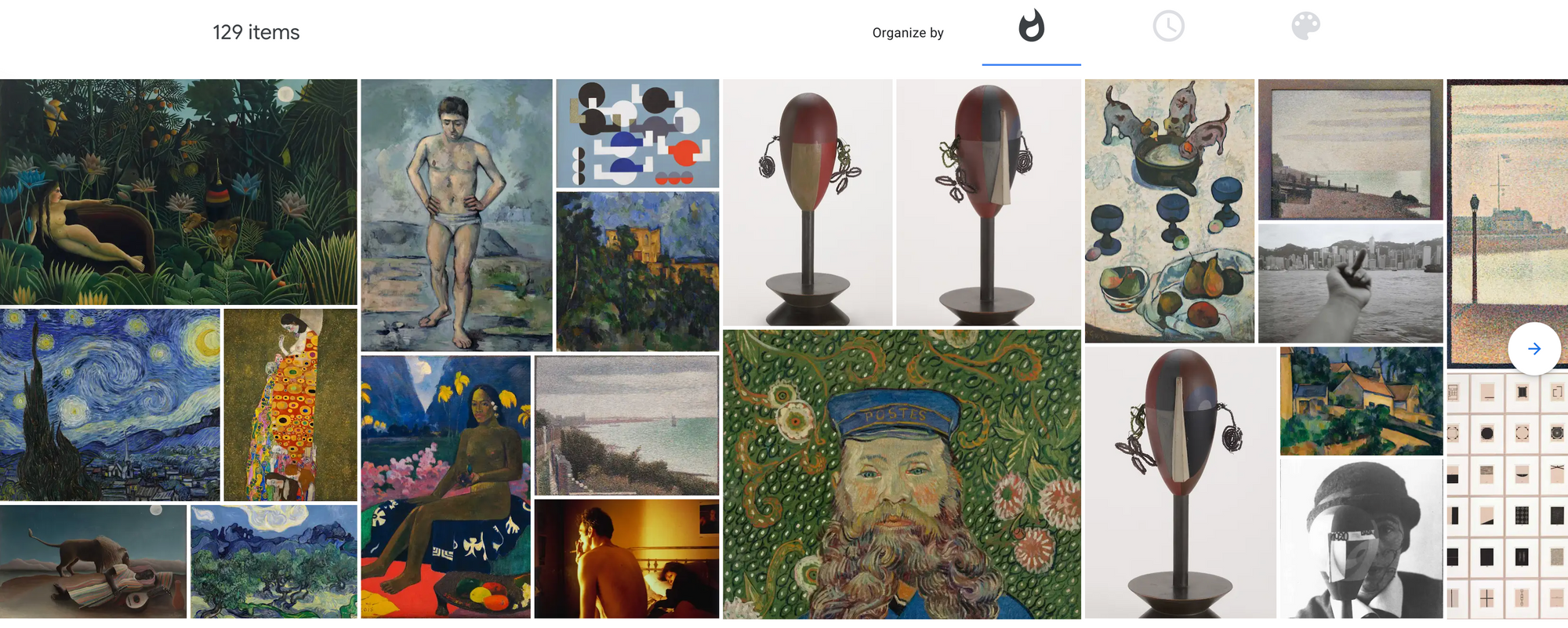

As one example, the mosaic of artifacts on Google Arts & Culture’s digital exhibitions allows visitors to order what they see by most viewed, chronological time period, or most prominent color.

That is an example of search built for general public visitors, but search also provides important functionality for researchers. Just as a general public visitor uses the physical space of a museum very differently from a researcher, the two groups have very different needs from search. Because digital exhibitions tend to be built for storytelling around a collection and aimed at a non-research audience, this blog post covers the search experience for exhibition visitors rather than researchers.

Omeka

The first stop on this overview of search experiences is a digital archive built with Omeka, the Appalachian Dulcimer Archive. The search interface initially lets visitors browse the full archive as a list. The subsequent pages in the submenu let visitors browse the archive by a list of tags, then by advanced faceted search, and then by a map-based view that shows where the artifacts in the archive originated. Sort order in these pages is fixed.

More powerful searches for large collections are made possible on Omeka by plugins such as the Omeka<>Elasticsearch integration.

Examples

Appalachian Dulcimer Archive search interface: https://dulcimerarchive.omeka.net/items/browse

Big Stuff Heritage: https://bigstuffheritage.org/?s=

Hermes Digital Heritage Management: https://hermoupolis.omeka.net/items/browse

Spotlight

Spotlight search is a unified experience with a list of items presented next to faceted search tools. Users may enter a keyword at the top right and then select the search facets they want in the left sidebar. Each facet is shown with a count of the artifacts in the exhibition that match it. When a visitor selects a facet, the facets that are no longer relevant are hidden.

If this looks familiar, that’s because it is very similar to the way search works on Amazon.com. Spotlight allows you to sort the results by relevance, title, and date.
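To make the facet behavior concrete, here is a minimal sketch in Python of faceted filtering with counts. It is not Spotlight’s actual implementation; the sample records, the field names (`language`, `format`, `date`), and the helper functions are all made up for illustration.

```python
from collections import Counter

# Hypothetical sample records; field names are assumptions for illustration.
ITEMS = [
    {"title": "Psalter", "date": 1250, "language": "Latin", "format": "Manuscript"},
    {"title": "Gospel Book", "date": 1100, "language": "Latin", "format": "Manuscript"},
    {"title": "Chronicle", "date": 1400, "language": "English", "format": "Manuscript"},
    {"title": "Map of Britain", "date": 1250, "language": "English", "format": "Map"},
]

FACET_FIELDS = ("language", "format")


def filter_items(items, keyword="", selected=None):
    """Keep items matching the keyword and every selected facet value."""
    selected = selected or {}
    keyword = keyword.lower()
    return [
        item for item in items
        if keyword in item["title"].lower()
        and all(item[field] == value for field, value in selected.items())
    ]


def facet_counts(items):
    """Count facet values among the given items; values with no matches never appear."""
    return {field: Counter(item[field] for item in items) for field in FACET_FIELDS}


# Selecting the "Latin" facet narrows the results and recomputes the counts.
results = filter_items(ITEMS, selected={"language": "Latin"})
counts = facet_counts(results)

# Sorting by date, one of the sort options mentioned above.
by_date = sorted(results, key=lambda item: item["date"])
```

Because the counts are recomputed from the filtered results, a facet value such as “Map” simply drops out of the sidebar once it no longer matches anything, which is the hiding behavior described above.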

Examples

Parker Library Exhibition Search: https://exhibits.stanford.edu/parker/catalog?exhibit_id=parker&search_field=search&q=

List of Exhibits at Stanford University Libraries: https://exhibits.stanford.edu/

Google Arts & Culture

The search interface on Google Arts & Culture is designed quite differently from the first two options. The first and most critical difference is that search is never limited to a single exhibition: every search covers all of the exhibitions published on the Google Arts & Culture platform.

The search experience on Google Arts & Culture also differs in that there is no traditional advanced faceted search like the one on the first two platforms. Instead, a single text search box offers an autocomplete dropdown that provides functionality similar to faceted search by populating its list with results from different types of data.

The autocomplete returns results from different types of data in the same list, so “Egypt” returns exhibits, partners, places, stories, and so on that feature the word “Egypt” in some way. Selecting one of the topics or places leads to a feature page that provides an overview of the artifacts and stories related to that topic or place.
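As a rough illustration of a mixed-type autocomplete, the sketch below keeps every kind of entry in one index and tags each suggestion with its type. This is only an assumption about how such a feature could work, not Google’s implementation; the index entries and the `autocomplete` function are hypothetical.

```python
# Hypothetical index entries as (label, type) pairs; the types mirror those named above.
INDEX = [
    ("Egypt", "place"),
    ("Ancient Egypt", "topic"),
    ("Egyptian Museum", "partner"),
    ("Treasures of Egypt", "story"),
    ("Greece", "place"),
]


def autocomplete(query, index=INDEX, limit=5):
    """Return (label, type) pairs whose label contains the query, ignoring case.

    Labels that start with the query are ranked ahead of other matches.
    """
    q = query.strip().lower()
    if not q:
        return []
    matches = [(label, kind) for label, kind in index if q in label.lower()]
    matches.sort(key=lambda pair: (not pair[0].lower().startswith(q), pair[0].lower()))
    return matches[:limit]


suggestions = autocomplete("egypt")
for label, kind in suggestions:
    print(f"{label} ({kind})")
```

Showing the type next to each label is what lets a single list stand in for facets: the visitor chooses between a place, a topic, a partner, or a story at the moment of selection.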

Additionally, as mentioned at the start of this post, Google Arts & Culture offers a mosaic explore interface that allows visitors to browse an exhibition ordered by “Most Popular” items, chronology, or primary color.
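The three orderings can be sketched as three sort keys over the same list. The example below assumes each artifact carries a view count, a year, and a primary color stored as an RGB triple, and it orders colors by hue; how Google Arts & Culture actually ranks colors is not something this post can confirm, so treat the hue approach and all field names as assumptions.

```python
import colorsys

# Made-up artifacts; "views", "year", and "rgb" are assumed field names.
ARTIFACTS = [
    {"title": "Blue vase", "views": 120, "year": 1650, "rgb": (30, 60, 200)},
    {"title": "Red tapestry", "views": 900, "year": 1480, "rgb": (200, 30, 30)},
    {"title": "Green jade", "views": 450, "year": -200, "rgb": (40, 180, 70)},
]


def hue(rgb):
    """Convert a 0-255 RGB triple to a hue in [0, 1) using the standard library."""
    r, g, b = (channel / 255 for channel in rgb)
    return colorsys.rgb_to_hsv(r, g, b)[0]


# One sort key per ordering offered in the mosaic view.
ORDERINGS = {
    "popular": lambda a: -a["views"],   # most viewed first
    "time": lambda a: a["year"],        # oldest first
    "color": lambda a: hue(a["rgb"]),   # around the color wheel, starting at red
}


def order_by(artifacts, mode):
    """Return a new list of artifacts sorted by the chosen ordering."""
    return sorted(artifacts, key=ORDERINGS[mode])
```

Calling `order_by(ARTIFACTS, "color")` walks the color wheel from red through green to blue, while `"popular"` and `"time"` reorder the same items without changing the underlying data.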

The GA&C search interface is worth understanding for the sheer amount of design energy and thinking dedicated to creating exploration experiences for visitors. Some curators spend long hours publishing their data online but provide little to no interpretive material, and as a result their digital exhibitions may feel as undirected and impenetrable as a physical exhibition with no wall text.

The search doesn’t directly relate to the Story feature on GA&C, but Stories are also an important interpretive tool for getting visitors to explore online collections, and I’ll cover them in more depth in a future blog post.

Examples

Google Arts & Culture Explore Page: https://artsandculture.google.com/explore

Egypt Place page on Google Arts & Culture: https://artsandculture.google.com/entity/egypt/m02k54

Mused

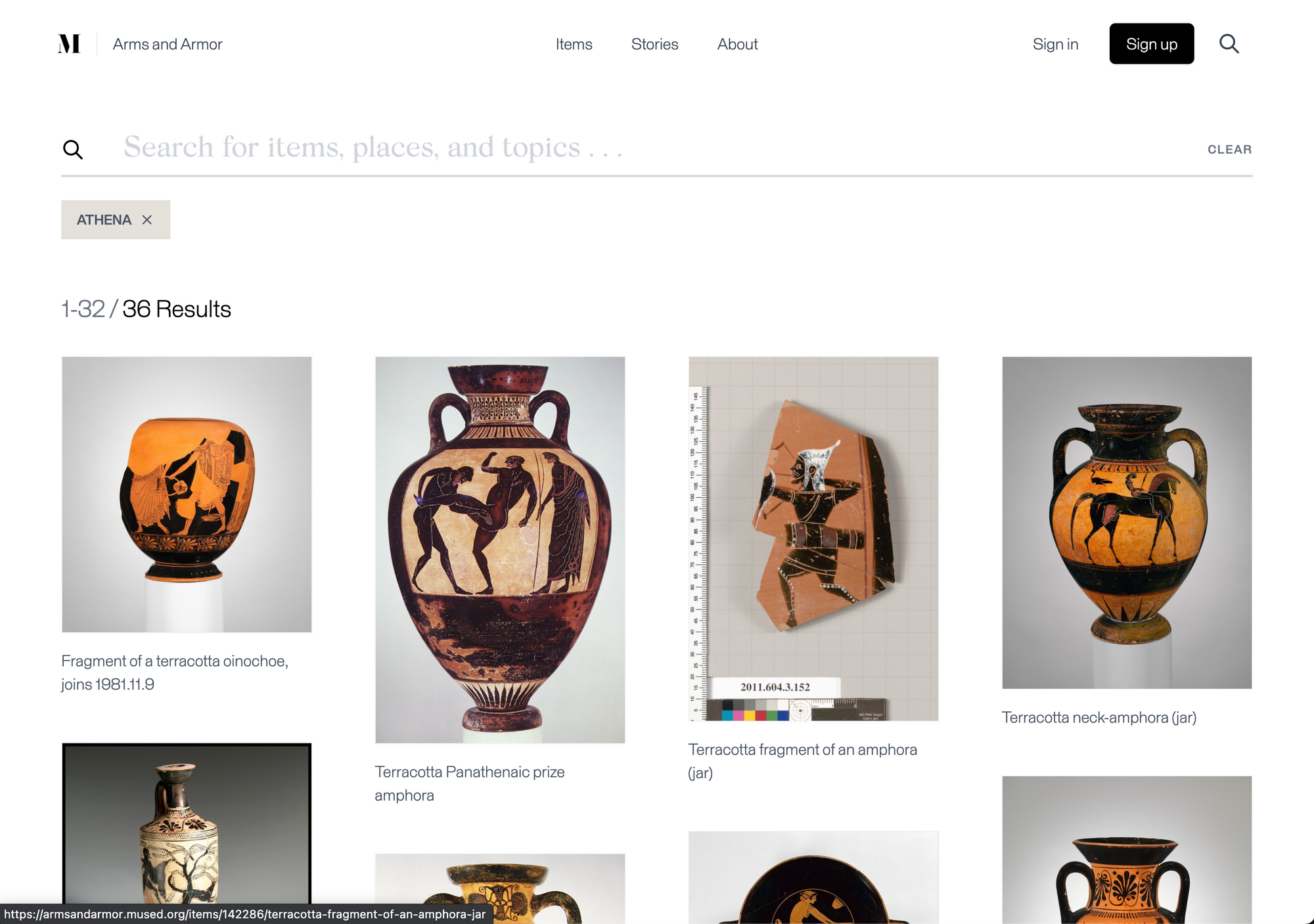

The Mused search interface is a search and exploration experience that enables discovery of artifacts, powered by machine learning. Currently, the text search covers artifact titles, descriptions, and metadata to provide a unified search experience that’s approachable for general public users.

The machine learning integration derives metadata about each artifact to enable features such as searching by color and other salient characteristics. This is made possible by the Data Grinder software, which uses state-of-the-art computer vision services to learn more about the images presented in each exhibition.
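A unified search over titles, descriptions, and machine-generated metadata might look roughly like the sketch below. The records, the `colors` and `labels` metadata keys, and both functions are hypothetical stand-ins; they do not reflect the actual schema or API of Mused or Data Grinder.

```python
# Made-up records; the "metadata" keys stand in for whatever an image-analysis
# step might attach and are not Mused's or Data Grinder's actual schema.
ARTIFACTS = [
    {
        "title": "Faience amulet",
        "description": "Small protective charm in the shape of an eye.",
        "metadata": {"colors": ["blue", "green"], "labels": ["jewelry"]},
    },
    {
        "title": "Limestone relief",
        "description": "Carved panel showing a blue lotus offering.",
        "metadata": {"colors": ["beige"], "labels": ["sculpture"]},
    },
]


def searchable_text(artifact):
    """Flatten title, description, and metadata values into one lowercase string."""
    parts = [artifact["title"], artifact["description"]]
    for values in artifact["metadata"].values():
        parts.extend(values)
    return " ".join(parts).lower()


def search(artifacts, query):
    """Return artifacts that contain every query term in any searchable field."""
    terms = query.lower().split()
    return [a for a in artifacts if all(t in searchable_text(a) for t in terms)]
```

With this data, `search(ARTIFACTS, "blue")` finds both artifacts: the amulet because of its color metadata and the relief because of its description. That is the point of a unified search: the visitor never has to know which field held the match.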

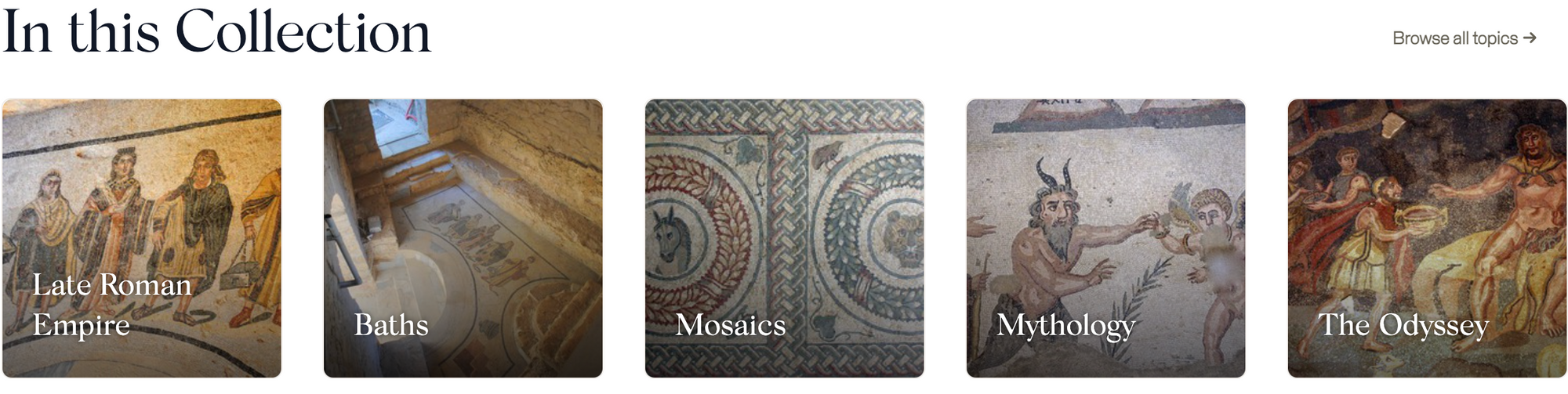

Related to search and discovery, each exhibition features Topics and Stories that provide inroads to learning about the different artifacts in the exhibition.

That’s all, GLAM-orous humans! If there are any other subjects that you’d like to know more about while building your digital exhibitions online, I’d be more than glad to cover them or interview people who are experts in that field. Let me know by commenting here, and as ever, if you want to keep on top of the latest in GLAM-related technologies, you can enter your email ✉️✨ to subscribe here.